Introduction

Elasticsearch is a search engine that lets you store, search, and analyze large amounts of data quickly and in near real time, and it is designed to scale. One way to load data into Elasticsearch is through its Bulk API.

In this approach, a Docker image reads data from JSON files and sends it to Elasticsearch in bulk. This saves time and resources because records are not sent one request at a time, so large amounts of data can be loaded quickly and efficiently.
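
To make the bulk idea concrete, here is a minimal sketch of how a batch of documents can be serialized into the newline-delimited JSON body that the `_bulk` endpoint accepts. The function name `build_bulk_body` and the sample documents are made up for illustration; the script in the repository may build its requests differently.

```python
import json
from typing import Iterable


def build_bulk_body(index_name: str, documents: Iterable[dict]) -> str:
    """Serialize documents into a newline-delimited JSON bulk body."""
    lines = []
    for doc in documents:
        # Action line: index the document on the next line into index_name.
        lines.append(json.dumps({"index": {"_index": index_name}}))
        # Source line: the document itself.
        lines.append(json.dumps(doc))
    # Every line, including the last one, must be terminated by a newline.
    return "".join(line + "\n" for line in lines)


# Two hypothetical documents become four lines in a single request body.
body = build_bulk_body("elon_data_index", [{"id": 1}, {"id": 2}])
```

Sending one body like this replaces one HTTP request per document, which is where the time saving comes from.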

Prerequisites

To run this solution in a container and connect it to an already running Elasticsearch instance, you need Docker and Docker Compose installed.

Setup

Follow these steps to set up the solution.

1. Clone this repository to your local machine

git clone https://github.com/raowaqasakram/elasticsearch-bulk-loader.git

2. Update the configuration files

- Navigate to the configs folder.
- Open the index_mappings.json file and update it with your index mappings.
- Open the index_settings.json file and update it with your index settings.
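
As a rough sketch of how these two files could be combined into the body of an index creation request, consider the snippet below. It assumes that each file holds the bare mappings or settings object (not wrapped in an outer "mappings" or "settings" key); the helper name `load_index_config` is hypothetical, and the actual script may read the files differently.

```python
import json
from pathlib import Path


def load_index_config(config_dir: str) -> dict:
    """Read both config files and return a create-index request body."""
    config_path = Path(config_dir)
    # Assumption: each file contains the bare object for its section.
    mappings = json.loads(
        (config_path / "index_mappings.json").read_text(encoding="utf-8")
    )
    settings = json.loads(
        (config_path / "index_settings.json").read_text(encoding="utf-8")
    )
    return {"settings": settings, "mappings": mappings}
```

Calling `load_index_config("configs")` from the repository root would return a dictionary with the two sections side by side.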

3. Place JSON files

- Navigate to the jsonData folder.
- Put all the JSON files you want to load into Elasticsearch in this folder.
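
For illustration, reading the files from this folder could look like the sketch below. It assumes that each file contains exactly one JSON document; `iter_json_documents` is a hypothetical helper, not necessarily what the repository's script does.

```python
import json
from pathlib import Path
from typing import Iterator


def iter_json_documents(data_dir: str) -> Iterator[dict]:
    """Yield the parsed content of every .json file in data_dir, in name order."""
    for path in sorted(Path(data_dir).glob("*.json")):
        with path.open(encoding="utf-8") as handle:
            # Assumption: one JSON document per file.
            yield json.load(handle)
```

Using a generator keeps memory usage flat, because only one file is parsed at a time even when the folder holds many thousands of files.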

4. Docker Compose configuration

- Open the docker-compose.yml file.
- In the environment section, update the following line with the name of the Elasticsearch index where you want to load the data. For example:

- ES_INDEX_NAME=elon_data_index

- Make sure to link this application with the network where Elasticsearch is already running.

networks:
  bulk_data_network:
    # Replace 'elasticsearch_existing_network' with the actual name of your Elasticsearch network
    name: elasticsearch_existing_network
    external: true
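
Inside the container, the index name set in the environment section is available as an ordinary environment variable. A minimal sketch of how a Python script might read it is shown below; `get_index_name` is a hypothetical helper, and failing fast on a missing value is a design choice of this sketch rather than documented behavior of the image.

```python
import os
from typing import Mapping


def get_index_name(environ: Mapping[str, str] = os.environ) -> str:
    """Return the target index name from ES_INDEX_NAME, or raise if it is unset."""
    name = environ.get("ES_INDEX_NAME", "").strip()
    if not name:
        # Stop early instead of loading data into an unintended index.
        raise ValueError("ES_INDEX_NAME must be set in docker-compose.yml")
    return name
```

Passing the mapping as a parameter makes the function easy to try without touching the real environment, for example `get_index_name({"ES_INDEX_NAME": "elon_data_index"})`.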

Load Data to ES Index

Once the configuration is complete, start the container with the following command:

sudo docker-compose up

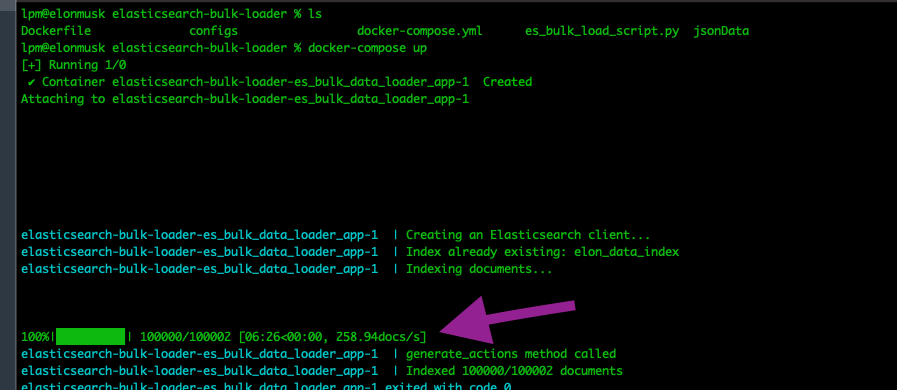

Console Output

After you run the command, the console displays the output of the loading process.

On my machine (Apple M1, 8 GB RAM), loading 100,000 JSON files into an Elasticsearch index took approximately 6 minutes and 26 seconds.
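
To put that timing in perspective, the average throughput can be worked out directly from the two numbers above:

```python
total_files = 100_000
elapsed_seconds = 6 * 60 + 26  # 6 minutes 26 seconds = 386 seconds

files_per_second = total_files / elapsed_seconds
milliseconds_per_file = elapsed_seconds / total_files * 1000

print(f"{files_per_second:.0f} files per second")       # 259 files per second
print(f"{milliseconds_per_file:.2f} ms per file")       # 3.86 ms per file
```

These are averages for this one run on this one machine; results on other hardware or with other document sizes will differ.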

Important Point

If the index does not already exist in Elasticsearch, the process creates it and then loads the data.

If the index already exists, the process loads the JSON files from the jsonData folder into the index specified in the docker-compose.yml file. Your existing data in the index remains intact.
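
The behavior described here can be sketched as a small "create only if missing" check. The snippet assumes a client object that exposes `indices.exists` and `indices.create` with keyword arguments, in the style of the official Elasticsearch Python client (8.x); `ensure_index` is a hypothetical helper, and the repository's script may implement this differently.

```python
from typing import Any


def ensure_index(client: Any, index_name: str, mappings: dict, settings: dict) -> bool:
    """Create index_name only when it is missing. Return True if it was created."""
    if client.indices.exists(index=index_name):
        # Index already present: leave it and its data untouched.
        return False
    # Assumption: the client accepts mappings and settings as keyword arguments.
    client.indices.create(index=index_name, mappings=mappings, settings=settings)
    return True
```

Because the function never deletes or recreates an existing index, running the loader again only adds documents to it.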

Verifications

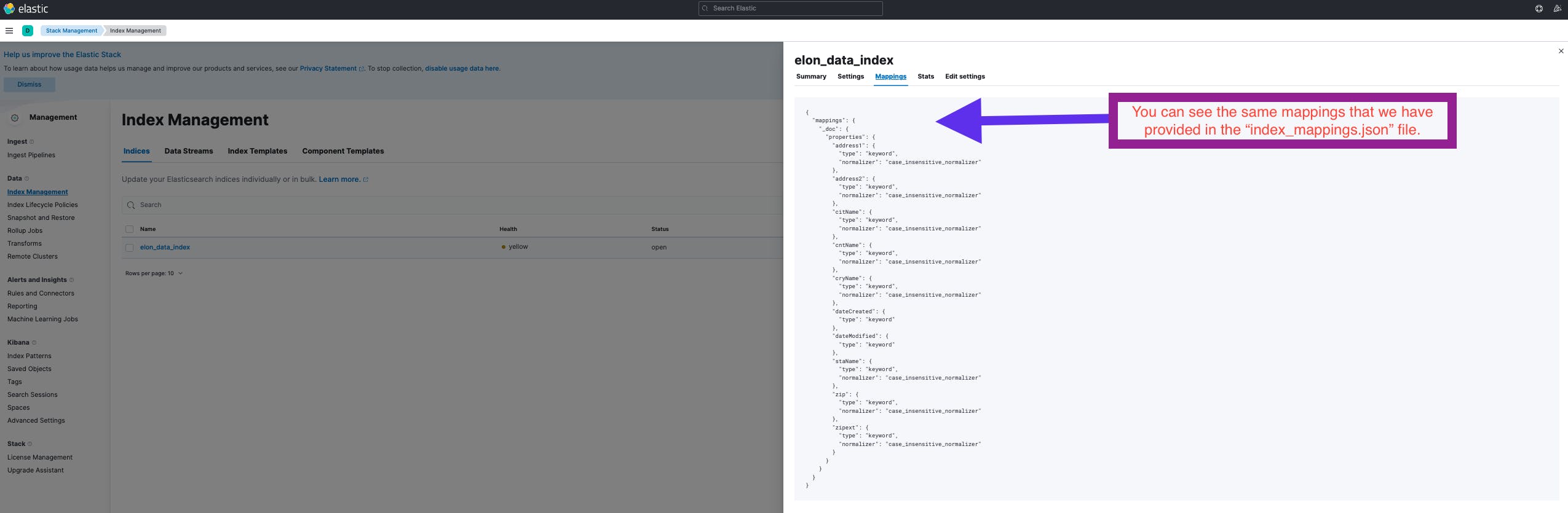

Index Mappings verification

The mappings specified in the index_mappings.json file are applied when the Elasticsearch index is created.

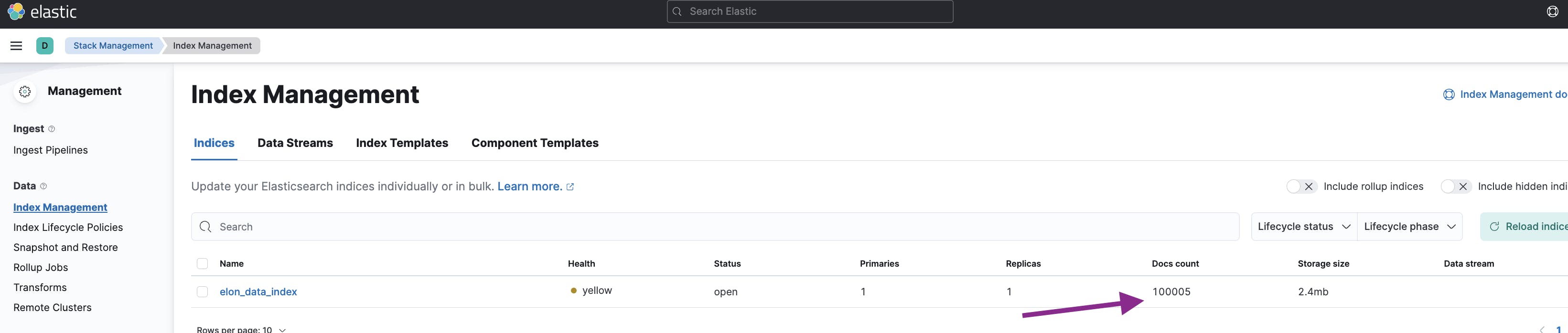

Bulk data load verification

The index named elon_data_index contains over 100,000 documents that were loaded successfully.
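
The same check can be scripted against the `_count` endpoint, which returns a JSON object with a `count` field. The sketch below uses only the Python standard library and assumes an Elasticsearch instance reachable over plain HTTP without authentication; the base URL is a placeholder and the helper names are hypothetical.

```python
import json
from urllib.request import urlopen


def count_url(base_url: str, index_name: str) -> str:
    """Build the URL of the _count endpoint for an index."""
    return f"{base_url.rstrip('/')}/{index_name}/_count"


def parse_count(response_body: str) -> int:
    """Extract the document count from a _count response body."""
    return int(json.loads(response_body)["count"])


def fetch_document_count(base_url: str, index_name: str) -> int:
    """Ask Elasticsearch how many documents the index holds."""
    # Assumption: no TLS or authentication is required to reach the cluster.
    with urlopen(count_url(base_url, index_name)) as response:
        return parse_count(response.read().decode("utf-8"))
```

For example, `fetch_document_count("http://localhost:9200", "elon_data_index")` would return the number of documents in the index if Elasticsearch is listening on that address.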

Conclusion

In this article, we have discussed how to load bulk JSON data into Elasticsearch using the Bulk API and Docker. This approach allows you to load large amounts of data quickly and efficiently.

We have also covered how to update the configuration files and how to run the Docker image with Docker Compose. This solution can be used to automate the loading of data into Elasticsearch, saving time and resources.

References

GitHub

A Python script to load the data using Elasticsearch Bulk API.

Docker Hub

The Docker image is published on Docker Hub.

Elasticsearch Bulk API